Camera the Size of a Quarter Is 100% Ready to Disrupt Machine Vision

Manufacturers employ machine vision for tasks ranging from inspection and sorting to robot guidance and 3D mapping. As the list grows, engineers need smarter, smaller cameras. Next year, FLIR Systems' quarter-sized Firefly camera, which functions as a small, stand-alone vision system using deep learning, will answer the call.

The deep learning neural network can be trained to understand if images going by are 'correct' or 'not correct.' With this method, developers can quickly and easily develop systems to address complex and even subjective decisions. We asked Mike Fussell, FLIR's product marketing manager, why the industry should take notice of the tiny camera system.

NED [New Equipment Digest]: What is the Firefly camera, and which industries and workers will find it most useful?

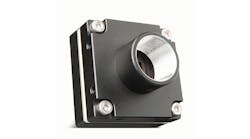

MF [Mike Fussell]: The Firefly camera lets system developers deploy a trained neural network onto its integrated Intel Movidius Myriad 2 Vision Processing Unit (VPU), so the camera can operate as a stand-alone vision system in a tiny 1 x 1 x 0.5-inch device.

To do the same thing today, you would need a camera, a processor (a PC, an SoC, or a program in the cloud), and a VPU such as the Intel Movidius Compute Stick. The availability of tools from Google, Amazon, Intel, and Nvidia for creating and training neural networks is making the technology more accessible and empowering new players to enter established markets with competitive products.

NED: What are the main features of the camera?

MF: As a machine vision camera, its main features are excellent image quality and advanced automatic or precise manual control of imaging parameters to capture the exact images required. Firefly distinguishes itself from other machine vision cameras with its ability to perform deep learning inference on those images based on a user-supplied neural network, independent of a host system.

A standard machine vision camera is typically the size of an ice cube. The Firefly is roughly half that size. It's challenging to do the product justice in photographs. When we first showed the camera at trade shows, people were astounded by how small Firefly is.

NED: What's your favorite feature and why?

MF: Firefly's ability to run user-generated neural networks on-camera, performing deep learning inference on the images it captures. With this ability, images no longer need to be sent to a host PC or the cloud for a decision. Instead, the camera captures images and uses them as the input to the on-camera neural network. The camera can transmit an 'answer' embedded in the image data, or as an electrical signal via an external connector.

An interesting use of Firefly is in smart pet doors. A neural network trained to recognize your pet could be loaded onto the camera. The camera would only signal the door to unlock when it recognizes your pet.
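The pet door logic described above can be sketched in a few lines of Python. This is only an illustration of the decision step, not FLIR's firmware or API: the label names, the 0.90 threshold, and the `should_unlock` helper are all hypothetical, and the raw scores are assumed to come from whatever classification network the user has trained.

```python
import math
from typing import List, Sequence

# Hypothetical class labels for a user-trained classifier (not from FLIR).
LABELS = ("my_pet", "other_animal", "nothing")
# Hypothetical confidence required before the door is signaled to unlock.
UNLOCK_THRESHOLD = 0.90


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw network scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def should_unlock(scores: Sequence[float],
                  target: str = "my_pet",
                  threshold: float = UNLOCK_THRESHOLD) -> bool:
    """Return True only when the top class is the target and is confident enough."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best] == target and probs[best] >= threshold


if __name__ == "__main__":
    # Example scores for one frame; in a real system they come from the network.
    frame_scores = [8.0, 1.0, 0.5]
    print("unlock" if should_unlock(frame_scores) else "stay locked")
```

In a design like this, the boolean result would correspond to the electrical signal the camera sends to the door. Requiring both the right class and a high confidence is one way to keep the door locked when the network is unsure.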

NED: How is the Firefly different from similar cameras?

MF: The combination of Firefly's open platform, on-camera inference, and ultra-compact size. Not only does Firefly support on-camera inference, but it does so without restrictions on the deep learning frameworks or software that can be used. Its tiny size, light weight, and low power consumption make it ideal for embedding into portable devices.

NED: In pilot projects, what data showed that the Firefly would be useful?

MF: The Firefly, even without using the on-camera inference, is a very small, low-cost, yet powerful machine vision camera. With the Firefly, "inference on the edge" can be achieved. This means that system designers can use AI to analyze images and make decisions without a PC host or sending images to the cloud for analysis and decision-making. Instead, the decision-making is accomplished faster and more securely right on the camera.

NED: When was it developed and what manufacturing challenges is it meant to solve?

MF: Development of the Firefly camera began in 2018, and we are releasing it in 2019. Its support for on-camera inference gives machine designers an easy path to deploying deep learning inference in their applications. This will allow them to perform subjective image analysis tasks accurately without the complex and time-consuming rules-based vision application programming used today.

NED: Anything else you'd like to add that readers might find interesting?

MF: If you're new to deep learning, you can find a tutorial on our webpage, www.flir.com/firefly, called "How to build an image classification system for less than $600." It includes links to software downloads and code you can paste in.

Anyone who has deployed a neural network onto a Movidius Compute Stick and its Myriad 2 processor can take that same network and deploy it to the Firefly.