Deep Learning Spreads Automated Inspection to Assemblies, Packaging, and Kitting

Machine vision has been instrumental in automating quality assurance thanks to its ability to locate, identify, and inspect products through computational image analysis.

Conventional computational image analysis has its limits, however, and those limits have traditionally confined machine vision to component inspection. When the component is part of a larger assembly, a complex package, or a kit (an automotive assembly, a circuit board, or a surgical kit, for example), random product placement, variations in lighting, and other factors can overwhelm the computations of conventional machine vision systems. Consequently, the final inspection of assemblies, packages, and kits has largely remained a manual operation.

While human inspectors excel at validating complex assemblies, they are subject to fatigue. Studies show that most operators can focus on a single task for only 15 to 20 minutes at a time. Qualified inspectors are also increasingly hard to find. In 2018, more than 63% of manufacturers said they were having difficulty staffing their assembly lines, according to Assembly magazine’s 2018 State of the Profession report, a figure 16 percentage points higher than the previous year’s. The shortage compounds itself, because inspectors tasked with quality assurance often rise from the ranks of the best assemblers: every inspector promoted from within takes a skilled worker off the line.

While labor shortfalls explain the motivation to find automated inspection solutions, manufacturers hoping to leverage machine vision still face several challenges when applying it to inspect assemblies, packages, and kits.

The Limits of Conventional Vision Systems

One limitation is part-to-part variation, which conventional vision solutions handle poorly. Individual components typically differ slightly from one another. That is not a problem when the variance of interest is a well-defined defect, such as a bent or missing lead on a semiconductor chip. But it is much more difficult to program a machine vision system to detect variances among several components in a finished assembly, or even to identify cosmetic differences on a single component, such as a blemish on a cast or machined part.

Conventional machine vision can also struggle to validate the correct relationship between multiple assembled parts. An automotive transmission, for instance, incorporates hundreds of individual parts and dozens of critical components. Programming a vision system to confirm the size and position of every critical component is time-consuming and impractical.

As if these challenges were not difficult enough for conventional machine vision systems, assembly, packaging, and kitting lines also undergo regular changeovers in response to new sales and production requirements. With each changeover, new components may be introduced to a kit, or similar parts may be packaged differently to meet a specific customer’s needs. Programming and reprogramming an inspection system for each new assembly, grouping, package, or kit would incur the same high offline engineering costs as programming one for an assembly with a high component count.

Every Industry Values Assembly Verification

Almost every industry has some type of assembly, packaging, or kitting application, whether as a final inspection step, an interim inspection step before further value is added, or a combination of both. Similarly, deep learning technology offers solutions that span virtually every market. Cognex is working with leaders across several verticals to optimize deep learning solutions for both final and in-line assembly verification.

Automotive assemblies that comprise thousands of individual components are emblematic of the need for more sophisticated inspection systems. A single missing hose or plug can stall a production line, costing millions of dollars an hour. Worse, such an omission can create consumer safety issues, such as a seized engine or a fatally flawed brake system. Automakers negotiate contracts with their suppliers that closely stipulate acceptable levels of defects per shipment, as well as the financial penalties for failing to meet those levels. Consequently, inadequate inspection of whether final assemblies are complete and correct can mean significant financial penalties, the loss of key customers, and costly product recalls.

Similar financial and safety risks exist in the food industry and in medical packaging and kitting. If a packaging error leads someone with a severe nut allergy to eat food containing nuts, for example, the result could be fatal. The dangers of incomplete surgical kits or incorrectly packaged medical supplies are obvious, and just as clearly unacceptable to manufacturer and customer alike.

High-volume electronics production, meanwhile, operates on razor-thin margins. A single missing screw in a laptop or cellphone screen assembly can result in thousands of dollars in lost revenue.

In each of these areas, Cognex is actively working with market leaders to develop deep learning solutions.

A Deep Learning Solution for Assembly Verification

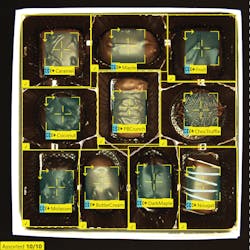

With the release of ViDi 3.4, Cognex’s deep learning vision software, automated inspection of complex assemblies, packages, and kits is not only possible but significantly simpler than with traditional machine vision solutions.

As part of the ViDi 3.4 release, Cognex added a Layout Model feature to the Blue Locate Tool (the Blue Tool). For assembly, packaging, and kitting applications, ViDi relies primarily on the Blue Tool and its Layout Model feature to locate and verify assemblies.

To see how ViDi 3.4 addresses assembly verification, return to the challenges outlined earlier in this article: variability, product mix, and changeover. In traditional vision solutions, multiple algorithms must be chosen, sequenced, programmed, and configured to identify and locate key features in an image. ViDi’s Blue Tool instead learns by analyzing images that an experienced quality control technician has graded and labeled. A single Blue Tool can be trained to recognize any number of products, as well as any number of component and assembly variations.

Verifying a car door panel assembly, for instance, involves checking for specific window switches and trim pieces, depending on the door being assembled. The same factory can produce doors for different trim levels as well as for different countries. A single Blue Tool can be trained to locate and identify each type of window switch and trim piece using an image set that includes all of these components. Trained over a range of images, the tool learns what each component should look like and can locate and distinguish each one in production.

Unlike conventional machine vision systems, which require different algorithms combined in different ways for each object of interest in an image, ViDi’s Blue Tool can locate any number of different components without explicit programming. Because it is trained on a collection of images, it incorporates naturally occurring variation into its model, addressing both product variability and product mix during assembly verification.

Finally, to make sure that the correct types of window switches and trim pieces are installed, ViDi 3.4 uses Layout Models. With a Layout Model, the user simply draws regions of interest in the image’s field of view, telling the system to look for a specific trained component (for example, a driver’s-side window switch) in a specific location. The Layout Model is also accessible and configurable through the runtime interface, so no additional offline development is required, which simplifies product changeovers.
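To make the Layout Model idea more concrete, here is a minimal Python sketch. It is not the ViDi API: the `Region`, `Detection`, `check_layout`, and `LAYOUTS` names, the rectangular regions, the part labels, and the rule that a component counts as "in" a region when its center point falls inside it are all hypothetical, chosen only for illustration. The point it shows is that a layout is plain data, so a changeover amounts to selecting a different layout rather than writing new inspection code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Hypothetical rectangular region of interest drawn by the user."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float
    expected: str  # label of the trained component expected in this region

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


@dataclass(frozen=True)
class Detection:
    """Hypothetical locator output: a component label and its center point."""
    label: str
    x: float
    y: float


def check_layout(detections: list[Detection], layout: list[Region]) -> dict:
    """Return {region name: (passed, reason)} for every region in the layout."""
    results = {}
    for region in layout:
        found = [d.label for d in detections if region.contains(d.x, d.y)]
        wrong = [label for label in found if label != region.expected]
        if not found:
            results[region.name] = (False, "missing")
        elif wrong:
            results[region.name] = (False, "wrong part: " + ", ".join(wrong))
        else:
            results[region.name] = (True, "ok")
    return results


# Hypothetical layouts for two door variants; a changeover just picks another key.
LAYOUTS = {
    "driver_door_us": [
        Region("window_switch", 10, 10, 60, 40, "switch_4way"),
        Region("trim", 70, 10, 150, 40, "trim_chrome"),
    ],
    "driver_door_eu": [
        Region("window_switch", 10, 10, 60, 40, "switch_2way"),
        Region("trim", 70, 10, 150, 40, "trim_black"),
    ],
}

if __name__ == "__main__":
    seen = [Detection("switch_4way", 30, 25), Detection("trim_black", 100, 25)]
    print(check_layout(seen, LAYOUTS["driver_door_us"]))
    # {'window_switch': (True, 'ok'), 'trim': (False, 'wrong part: trim_black')}
```

The same detections checked against `LAYOUTS["driver_door_eu"]` would pass the trim region and fail the window switch region, which is the kind of variant-dependent result the door panel example describes.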

Deep Learning Continues to Evolve

The number of factors involved in verifying a final assembly, combined with the difficulty of programming conventional machine vision systems to handle broad variation, has made final assembly inspection nearly impossible to automate. Deep learning technology is rewriting that script, however. With deep learning tools, manufacturers in every industry can now automate assembly verification by breaking the application into two steps.

The first step is to train the deep learning neural network to locate each component type. The second step is to verify that each component is of the correct type and in the correct location. In ViDi 3.4, the Blue Tool handles the first step and the Layout Model handles the second.
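The two steps can be sketched in Python as follows. This is an illustration under stated assumptions, not Cognex’s implementation: `locate_components` is a hypothetical stand-in for a trained deep learning locator (it simply returns canned boxes here), and the 0.5 confidence cutoff and the rule of matching each expected component to a detection by intersection-over-union (IoU) of at least 0.5 are conventions chosen for the example.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned box with a component label and a confidence score."""
    label: str
    x0: float
    y0: float
    x1: float
    y1: float
    score: float = 1.0


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a.x1, b.x1) - max(a.x0, b.x0))
    iy = max(0.0, min(a.y1, b.y1) - max(a.y0, b.y0))
    inter = ix * iy
    union = (a.x1 - a.x0) * (a.y1 - a.y0) + (b.x1 - b.x0) * (b.y1 - b.y0) - inter
    return inter / union if union > 0 else 0.0


def locate_components(image) -> list[Box]:
    """Step 1 (hypothetical): a trained network would run on `image` here.

    For this sketch it ignores the image and returns canned detections.
    """
    return [
        Box("hose", 0, 0, 10, 10, 0.97),
        Box("plug", 20, 0, 30, 10, 0.91),
        Box("plug", 50, 50, 60, 60, 0.30),  # low-confidence, will be discarded
    ]


def verify(detections, expected, min_score=0.5, min_iou=0.5):
    """Step 2: each expected part needs a confident, same-type detection in place."""
    confident = [d for d in detections if d.score >= min_score]
    report = []
    for exp in expected:
        # Best-overlapping confident detection, regardless of its label.
        best = max(confident, key=lambda d: iou(d, exp), default=None)
        if best is None or iou(best, exp) < min_iou:
            report.append((exp.label, "missing"))
        elif best.label != exp.label:
            report.append((exp.label, f"wrong type ({best.label})"))
        else:
            report.append((exp.label, "ok"))
    return report


if __name__ == "__main__":
    expected = [Box("hose", 0, 0, 10, 10), Box("plug", 20, 0, 30, 10), Box("clip", 40, 0, 50, 10)]
    print(verify(locate_components(None), expected))
    # [('hose', 'ok'), ('plug', 'ok'), ('clip', 'missing')]
```

Keeping the locator and the verification rules separate mirrors the two-step split: retraining the locator does not require touching the verification step, and changing the expected layout does not require retraining.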

As manufacturers continue to use deep learning tools, they can accumulate production images and use them to retrain their systems to accommodate future manufacturing variances. This may also help limit future liability if previously unknown defects later turn up in products that have already shipped.

For more information, download our free guide, Deep Learning Image Analysis for Assembly Verification.