
At a time when wireless dominates, Ethernet may seem like yesterday’s technology, but its presence can be felt everywhere. It will even be crucial in the delivery of 5G services, thanks in part to Power over Ethernet (PoE) and the latest iteration of its specification, which raises the total power that can be sourced over a single connection to 90 W.

This is a marked increase over previous versions of PoE, allowing more sophisticated connected devices to operate without an offline (mains-powered) power supply. Despite its clear benefits, many engineers may still have misgivings about adopting the technology in their next design, so let’s look at some of the myths that surround PoE and debunk them in a rational and reasoned way.

1. PoE Isn’t Cost-Effective

This is probably the easiest myth to debunk, because PoE combines two essential services in a single cable. Power over Ethernet means exactly that: the same cable carries both power and communications, so you need only one cable instead of two. Plus, you don’t need to install a power outlet next to the equipment, known as the powered device (PD), if one isn’t already present, because all of its power is supplied over the Ethernet cable by the power sourcing equipment (PSE), typically a network switch or a midspan injector.

This means not only do you save on cable and cable-routing costs, but you can potentially save on the cost of installing a power outlet. Adding a PD to your infrastructure is also much simpler and quicker to achieve. Using PoE puts the power (no pun intended) of where to place equipment in the hands of the network manager, not the electrician or building maintenance team.

The efficiency of low-voltage conversion and distribution, coupled with the ability to manage power on a per-device basis, makes the total cost of operation easier to control and should ultimately reduce the amount of energy used.

2. It’s Difficult to Design With

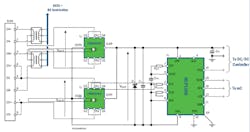

As with any standards-based technology, providers like ON Semiconductor must deliver solutions that meet the requirements set by independent bodies. In this case, that includes reference designs (see figure), with documentation, that comply with the Ethernet Alliance PoE Certification Program. A number of third-party test laboratories can also be used to confirm compliance.

These reference designs provide an ideal platform for starting a design, yet still leave manufacturers enough freedom to add their own value at the application layer. Basing a PoE PD on a reference design gives design teams a reliable starting point and also helps ensure their PD is interoperable with any compliant PSE on the market.

3. It Has Limited Application

That may have been true of earlier versions of the technology, but the latest specification (IEEE 802.3bt) raises the power a PSE can deliver to 90 W, leaving up to 71.3 W available at the PD after worst-case cable losses. The semiconductor industry has been on an aggressive power curve for many years, driving down the power consumption of individual transistors. This allows integrated device manufacturers (IDMs) to do more with less power, so two factors are at play: more power available to a PD, and PDs that need less power to do more.

What this means for most development engineers is that they now have a relatively large power budget to work with before needing to consider offline power, which carries its own costs in design, bill of materials (BOM), and certification. Taken together, these factors mean PoE can now meet the requirements of more applications than ever before.
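To make the power-budget argument concrete, here is a minimal sketch that picks the lowest PoE type whose worst-case PD power covers a given load. The per-type figures are the maximum power available at the PD for IEEE 802.3af, 802.3at, and the two IEEE 802.3bt types; the 10% design margin and the function name `smallest_poe_type` are illustrative assumptions, not part of the standard.

```python
from typing import Optional

# Maximum power available at the PD for each PoE type, in watts
# (worst case, after losses in 100 m of compliant cable).
PD_POWER_W = {
    "IEEE 802.3af (Type 1)": 12.95,
    "IEEE 802.3at (Type 2)": 25.5,
    "IEEE 802.3bt (Type 3)": 51.0,
    "IEEE 802.3bt (Type 4)": 71.3,
}


def smallest_poe_type(load_w: float, margin: float = 0.10) -> Optional[str]:
    """Return the lowest PoE type that can power `load_w` watts plus a design margin.

    The margin is a hypothetical engineering allowance, not a value from the standard.
    Returns None when even Type 4 falls short, i.e. offline power would be needed.
    """
    required_w = load_w * (1.0 + margin)
    for name, budget_w in PD_POWER_W.items():  # dicts keep insertion order (lowest first)
        if required_w <= budget_w:
            return name
    return None


for load in (10.0, 20.0, 40.0, 60.0, 70.0):
    print(f"{load:5.1f} W load -> {smallest_poe_type(load) or 'needs offline power'}")
```

With these assumptions a 20 W device fits within an IEEE 802.3at budget, while only loads above roughly 65 W (once the margin is added) push a design toward offline power.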

4. You Can’t Mix Gigabit Ethernet Speeds With Power

This is perhaps a common misconception because the idea of sending communications over power conductors has been around for some time and, in the past, has involved compromises on both sides. That really isn’t the case with PoE, thanks to the way the technology was conceived and developed.

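Here, power is applied as a common-mode voltage: the PSE raises both conductors of one pair to the same dc potential relative to another pair, while twisted-pair Ethernet carries data as a differential signal within each pair. A differential receiver responds only to the voltage difference between the two conductors of a pair, so the dc level is invisible to it. The PD accesses the power through the center taps of a standard Ethernet pulse transformer, where the differential data cancels out.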
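The idea can be modeled with ideal components in a few lines: each pair carries a differential data symbol on top of a dc common-mode level, a differential receiver subtracts the two wires so the dc term cancels, and an ideal transformer center tap averages them so the data cancels instead. This is a sketch under stated assumptions; the 50 V supply and the ±1 V symbols are hypothetical values, and real transformers, line codes, and cable impairments are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    """Instantaneous voltages (V) on the two wires of one twisted pair."""
    plus: float
    minus: float


def drive_pair(data_v: float, common_mode_v: float) -> Pair:
    """Superimpose a differential data symbol on a dc common-mode (power) level."""
    return Pair(plus=common_mode_v + data_v / 2, minus=common_mode_v - data_v / 2)


def receive_data(pair: Pair) -> float:
    """Differential receiver: subtracting the wires cancels the common-mode term."""
    return pair.plus - pair.minus


def center_tap(pair: Pair) -> float:
    """Ideal transformer center tap: averaging the wires cancels the data term."""
    return (pair.plus + pair.minus) / 2


def pd_supply_voltage(supply_pair: Pair, return_pair: Pair) -> float:
    """Dc voltage the PD sees between the center taps of two pairs."""
    return center_tap(supply_pair) - center_tap(return_pair)


SUPPLY_V = 50.0  # hypothetical PSE output voltage
for sym_a, sym_b in [(1.0, -1.0), (-1.0, -1.0), (1.0, 1.0)]:  # hypothetical symbols
    pair_a = drive_pair(sym_a, SUPPLY_V)  # pair at the supply potential
    pair_b = drive_pair(sym_b, 0.0)       # pair at the return potential
    print(receive_data(pair_a), receive_data(pair_b), pd_supply_voltage(pair_a, pair_b))
```

Whatever symbols are sent, each receiver recovers its own data and the PD always sees the full supply voltage, which is why the two uses of the same conductors don’t interfere.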

For 10- and 100-Mb/s data rates, which use only two of the four twisted pairs for data, PoE offers two methods for combining power and communications. The first (Alternative A) puts power onto the same pairs that carry data, while the second (Alternative B) puts it on the two spare pairs. Gigabit Ethernet uses all four pairs for data, so at 1 Gb/s power must share conductors with data whichever pairs carry it. To deliver 90 W, IEEE 802.3bt applies power to all four twisted pairs, the same four pairs Gigabit Ethernet uses for data. Delivering 90 W and Gigabit Ethernet speeds together is therefore entirely possible over cabling that meets IEEE 802.3bt requirements.
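Which pairs carry what can be captured with a few sets. The sketch below assumes the conventional RJ45 pin pairs, with 10BASE-T and 100BASE-TX using pins 1/2 and 3/6 for data, Alternative A powering pins 1/2 and 3/6, Alternative B powering pins 4/5 and 7/8, and four-pair powering using all of them; the dictionary keys and the helper name `shared_pairs` are illustrative.

```python
from typing import Dict, Set, Tuple

PinPair = Tuple[int, int]

ALL_PAIRS: Set[PinPair] = {(1, 2), (3, 6), (4, 5), (7, 8)}

# RJ45 pin pairs carrying data at each rate.
DATA_PAIRS: Dict[str, Set[PinPair]] = {
    "10/100 Mb/s": {(1, 2), (3, 6)},
    "1 Gb/s": ALL_PAIRS,
}

# RJ45 pin pairs carrying power for each powering option.
POWER_PAIRS: Dict[str, Set[PinPair]] = {
    "Alternative A": {(1, 2), (3, 6)},
    "Alternative B": {(4, 5), (7, 8)},
    "4-pair (IEEE 802.3bt)": ALL_PAIRS,
}


def shared_pairs(rate: str, powering: str) -> Set[PinPair]:
    """Pairs that carry both data and power for a given rate and powering option."""
    return DATA_PAIRS[rate] & POWER_PAIRS[powering]


for rate in DATA_PAIRS:
    for powering in POWER_PAIRS:
        shared = sorted(shared_pairs(rate, powering))
        print(f"{rate:12} {powering:22} shared pairs: {shared or 'none'}")
```

Only the 10/100 Mb/s plus Alternative B combination keeps power and data on separate pairs; at 1 Gb/s every option shares pairs with data.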

5. PoE Isn’t Suitable for the IoT

It’s true that PoE emerged almost in parallel with the IoT, and in the early days the two probably didn’t mix. Several significant trends are now at play, though, which indicate the two technologies are perfectly matched.

First, the number of IoT endpoints is expected to increase year-on-year for at least the next five years. Many of these endpoints will be smart sensors or smart actuators: relatively small devices that need modest amounts of power but must be connected to the internet. Many wireless protocols popular today still struggle to provide direct IP addressability, so their devices must connect through a local gateway that, in turn, is likely to reach the internet over a wired Ethernet connection.

Powering that gateway with PoE therefore adds little overhead, since the Ethernet cable is already in place. Wirelessly connected endpoints still need power, supplied either by primary cell batteries (which need replacing) or by offline power (which needs an ac-dc converter). Connecting the endpoints themselves with PoE would remove the need for both primary cells and offline power, as well as for the wireless link.

Security in the IoT is a growing concern, and one closely associated with wireless connectivity, where proximity may be the only barrier to connecting to an unsecured endpoint. A wired Ethernet connection raises that barrier considerably, because an attacker needs physical access to the cable or the endpoint.

Furthermore, the most popular wireless protocols used in the IoT operate in license-exempt spectrum, since that avoids licensing costs. The result is heavy RF congestion, and while the protocols are very good at coping with it, congestion still introduces reliability and stability issues. It can also increase operating power, because dropped packets may need to be re-sent. A wired PoE link doesn’t suffer from RF congestion and provides a much more reliable and stable communications channel.
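The retransmission cost can be estimated with a simple model. Assuming each transmission is lost independently with probability p and the sender retries until it succeeds, the number of sends per delivered packet follows a geometric distribution with mean 1/(1 - p). The sketch applies that to radio energy; the independence assumption and the 100 µJ per-transmission figure are illustrative, and real protocols add backoff and acknowledgment overhead on top.

```python
def expected_transmissions(loss_probability: float) -> float:
    """Mean sends per delivered packet with independent losses and unlimited retries."""
    if not 0.0 <= loss_probability < 1.0:
        raise ValueError("loss_probability must be in [0, 1)")
    return 1.0 / (1.0 - loss_probability)


def energy_per_delivered_packet_uj(energy_per_tx_uj: float, loss_probability: float) -> float:
    """Average radio energy (microjoules) spent per successfully delivered packet."""
    return energy_per_tx_uj * expected_transmissions(loss_probability)


ENERGY_PER_TX_UJ = 100.0  # hypothetical energy for one transmission
for p in (0.0, 0.1, 0.3, 0.5):
    print(f"loss {p:.0%}: {expected_transmissions(p):.2f} sends, "
          f"{energy_per_delivered_packet_uj(ENERGY_PER_TX_UJ, p):.1f} uJ per delivered packet")
```

Under this model a 50% loss rate doubles the radio energy per useful packet, which is the mechanism behind the extra operating power described above.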

And on the issue of power, PoE allows the current drawn by a PD to fall to very low levels before the PSE concludes the device has been disconnected and removes power; the PD only needs to keep presenting a Maintain Power Signature (MPS). This means endpoints can use ultra-low-power modes to conserve system energy in a way that wireless endpoints can’t easily match.

6. Power Dissipation in the Cable Is an Issue

The idea of transferring relatively high levels of power over conductors that were designed purely for data might be cause for concern amongst engineers who already deal with issues such as signal integrity and power dissipation. But it’s important to appreciate that PoE isn’t an afterthought that’s been retrospectively added in a way that stresses the underlying technology. The IEEE 802.3 specifications were developed by engineers who understand these issues and have taken them into account.

For example, the IEEE 802.3bt specification requires all four twisted pairs in the cable to be used when delivering 90 W, and it allows a maximum cable length of 100 meters. When only two twisted pairs (a pair set) deliver power, the dc loop resistance of that pair set must not exceed 12.5 Ω, which Category 5 or better cable guarantees over that distance.
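A back-of-the-envelope calculation shows how the standard budgets for cable loss. It assumes the PSE delivers its full rated power at its minimum output voltage, current splits evenly between pair sets, and each pair set has the worst-case loop resistance (20 Ω for IEEE 802.3af, which assumed Category 3 cable, and 12.5 Ω otherwise); the I²R loss then accounts for the gap between PSE and PD power. The minimum PSE voltages used (44, 50, 50, and 52 V) are the lower ends of each type’s output range, and connector resistance and pair-to-pair unbalance are ignored.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PoEType:
    name: str
    pse_power_w: float          # maximum PSE output power
    pse_min_voltage_v: float    # lower end of the PSE output voltage range
    pair_sets: int              # 1 = two-pair powering, 2 = four-pair powering
    loop_resistance_ohm: float  # worst-case dc loop resistance per pair set


TYPES = [
    PoEType("Type 1 (802.3af)", 15.4, 44.0, 1, 20.0),
    PoEType("Type 2 (802.3at)", 30.0, 50.0, 1, 12.5),
    PoEType("Type 3 (802.3bt)", 60.0, 50.0, 2, 12.5),
    PoEType("Type 4 (802.3bt)", 90.0, 52.0, 2, 12.5),
]


def worst_case_budget(t: PoEType) -> Tuple[float, float]:
    """Return (cable loss W, power left for the PD W) under the simplified model."""
    total_current_a = t.pse_power_w / t.pse_min_voltage_v
    current_per_set_a = total_current_a / t.pair_sets  # assumes an even split
    cable_loss_w = t.pair_sets * current_per_set_a ** 2 * t.loop_resistance_ohm
    return cable_loss_w, t.pse_power_w - cable_loss_w


for t in TYPES:
    loss_w, pd_w = worst_case_budget(t)
    print(f"{t.name}: {t.pse_power_w:.1f} W at PSE, {loss_w:.2f} W in cable, {pd_w:.2f} W at PD")
```

The results line up with the familiar PD figures (12.95 W, 25.5 W, 51 W, and roughly 71.3 W). The loss is real, around a fifth of the PSE output for Type 4 at full length, but it is budgeted for by the standard rather than left to chance.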

It’s clear, therefore, that power dissipation will not be an issue as long as the specification’s cable requirements are met.

7. The New Standard Requires New Hardware

As with most industry standards, maintaining backward compatibility is important. The IEEE 802.3bt specification is fully backward-compatible with IEEE 802.3af (12.95 W at the PD) and IEEE 802.3at (25.50 W at the PD).

This means that implementing the new standard will not require a hardware change to all PSEs or PDs on an existing network. This is important, as it allows for a mixture of PoE standards to coexist in the same network.
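Mixed networks work because power is allocated per connection through classification. In a simplified model, the PD requests a class, the PSE grants the lower of that request and the highest class it supports, and the PD must then operate within the granted budget. The table uses the IEEE 802.3af/at/bt class figures (class 0 omitted for brevity); the function names are hypothetical, and real equipment does this through hardware classification (optionally supplemented by LLDP) rather than a lookup.

```python
from typing import Dict, Tuple

# Class -> (power at PSE output W, power available at PD W). Class 0 omitted.
CLASS_POWER_W: Dict[int, Tuple[float, float]] = {
    1: (4.0, 3.84),
    2: (7.0, 6.49),
    3: (15.4, 12.95),
    4: (30.0, 25.5),
    5: (45.0, 40.0),
    6: (60.0, 51.0),
    7: (75.0, 62.0),
    8: (90.0, 71.3),
}

# Highest class each PSE type can grant.
PSE_MAX_CLASS: Dict[int, int] = {1: 3, 2: 4, 3: 6, 4: 8}


def granted_class(pse_type: int, requested_class: int) -> int:
    """Simplified allocation: the PSE grants whichever is lower, request or capability."""
    return min(requested_class, PSE_MAX_CLASS[pse_type])


def granted_pd_power_w(pse_type: int, requested_class: int) -> float:
    """Power budget the PD must live within after allocation."""
    return CLASS_POWER_W[granted_class(pse_type, requested_class)][1]


# A class 8 (90 W) PD on an older Type 2 PSE, and a class 3 PD on a new Type 4 PSE.
print(granted_pd_power_w(pse_type=2, requested_class=8))
print(granted_pd_power_w(pse_type=4, requested_class=3))
```

The first case only helps if the PD can run, perhaps with reduced functionality, at the lower level; a PD that strictly needs its full class may not be usable on an older PSE.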

8. A PD Will Still Need an Isolated Power Supply

This isn’t mandated by the specification, but in some cases it may be necessary to use an isolated dc-dc topology. Typically, this is required if there’s no enclosure, leaving contacts exposed, or if the media dependent interface (MDI) leads can’t be isolated from earth ground. If the PD is fully enclosed, a non-isolated dc-dc converter would be acceptable.

There’s no one answer to this; it will be application-dependent. For example, in some instances, it would be perfectly acceptable to use a non-isolated topology in a lighting application.

9. You Need Extra Protection in Your Powered Device

Protection against voltage and current surges is necessary in any application, and PoE is no different in this respect. Good design practice includes transient voltage suppressors (TVSs) selected to provide the right level of protection, limiting the voltage that can appear across the PD and its components during a surge.
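The basic selection rule for the suppressor can be written as two inequalities: the TVS must not conduct in normal operation, so its standoff (reverse working) voltage should be at least the 57 V top of the PSE output range, and its clamping voltage at the expected surge current must stay below the absolute-maximum rating of the most sensitive component it protects. The part values and the 100 V rating in this sketch are hypothetical; real selection also depends on surge current, energy rating, and the applicable surge test levels.

```python
from dataclasses import dataclass

MAX_POE_VOLTAGE_V = 57.0  # upper end of the PSE output voltage range


@dataclass(frozen=True)
class Tvs:
    standoff_v: float  # reverse working (standoff) voltage
    clamp_v: float     # clamping voltage at the expected surge current


def tvs_is_suitable(tvs: Tvs, abs_max_rating_v: float) -> bool:
    """True if the TVS stays off at 57 V and clamps below the protected part's rating."""
    stays_off_in_normal_operation = tvs.standoff_v >= MAX_POE_VOLTAGE_V
    clamps_low_enough = tvs.clamp_v < abs_max_rating_v
    return stays_off_in_normal_operation and clamps_low_enough


# Hypothetical candidates checked against a hypothetical 100 V absolute-maximum rating.
candidates = {"58 V part": Tvs(58.0, 93.6), "51 V part": Tvs(51.0, 82.4)}
for name, tvs in candidates.items():
    print(name, tvs_is_suitable(tvs, abs_max_rating_v=100.0))
```

The 51 V part clamps lower, but it would start conducting within the normal PoE operating range, which is why the standoff check comes first.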

Using PoE doesn’t increase the risk of surge damage, but the technology isn’t immune to it either. The need for protection is therefore no greater than with other forms of power delivery.

10. You Can’t Mix and Match Devices From Different Manufacturers

This may be the case with some wireless technologies built on optional profiles, but the IEEE 802.3bt standard was developed with interoperability in mind. This means any compliant PSE should work with any compliant PD, regardless of manufacturer.

Companies such as Sifos Technologies specialize in developing automated test-and-measurement equipment for PSE and PD testing, allowing companies to test their products in-house. Meanwhile, the Interoperability Laboratory of the University of New Hampshire is the exclusive third-party test house for the Ethernet Alliance PoE Certification Program, through which companies can certify their PSE and PD products.

11. You Can’t Have PoE Without Ethernet

This is an understandable assumption, but it’s actually possible to use a PoE connection purely for power delivery. Even though power is delivered alongside data, over the same conductors, the two are independent at the physical level. Any device that needs no more than 30 W and has the right hardware interface, starting with a valid detection signature, could extract power from the Ethernet cable and use it for any purpose without exchanging data with the PSE. Drawing more power would require the device to comply with the PoE PSE communication standards.
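The first piece of that hardware interface is detection: before applying power, a standard PSE probes the port at low voltage and looks for a signature resistor of about 25 kΩ in the PD. The sketch computes the slope resistance from two probe points, which cancels a fixed diode-bridge offset, and classifies it using the commonly quoted PSE thresholds (accept 19 kΩ to 26.5 kΩ, reject below 15 kΩ or above 33 kΩ). The probe voltages, the 1.2 V diode drop, and the function names are illustrative assumptions, and the capacitance checks a real PSE also performs are ignored.

```python
def slope_resistance_ohm(v1: float, i1: float, v2: float, i2: float) -> float:
    """Resistance from two (voltage, current) probe points; a fixed diode offset cancels."""
    return (v2 - v1) / (i2 - i1)


def classify_signature(r_ohm: float) -> str:
    """Classify a measured signature using the commonly quoted PSE thresholds."""
    if 19_000 <= r_ohm <= 26_500:
        return "valid"          # accepted as a PD
    if r_ohm < 15_000 or r_ohm > 33_000:
        return "invalid"        # rejected: the PSE must not apply power
    return "indeterminate"      # the PSE may accept or reject


def probe_current_a(v: float, signature_ohm: float, diode_drop_v: float = 1.2) -> float:
    """Hypothetical PD model: a diode-bridge drop in series with the signature resistor."""
    return max(0.0, (v - diode_drop_v) / signature_ohm)


for signature in (25_000, 10_000, 30_000):
    r = slope_resistance_ohm(4.0, probe_current_a(4.0, signature),
                             8.0, probe_current_a(8.0, signature))
    print(f"{signature} ohm PD -> measured {r:.0f} ohm: {classify_signature(r)}")
```

Using the slope rather than a single V/I ratio is what lets the measurement ignore the diode bridge in front of the signature resistor.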

While this isn’t the way PoE is really intended to be used, it’s viable and further extends its potential.

Power and data are closely linked, particularly in the IoT. PoE has the potential to provide both to a new generation of devices that require high data rates and high levels of security. The ability to deliver up to 90 W of power alongside gigabit data rates over a single cable that can be installed by network engineers will create new opportunities across all vertical markets.

Riley Beck is the Product Marketing Manager at ON Semiconductor.