In simple terms, machine vision enables computers to see. Most authorities in the industry define machine vision as the replacement of human visual perception and judgment with a camera, computer, and software. Together, these provide autonomous, noncontact image acquisition, analysis, and decision-making, and deliver the data needed to enhance or control an automated process.

The industry generally groups the basic machine vision use cases into the following categories:

- Inspection: A visual inspection includes identifying objects and features, verifying assembly, detecting defects, and counting.

- Location and guidance: As in vision-guided robotics (VGR), the location of individual objects in a scene can be determined either relative to the scene or relative to a real-world coordinate system.

- Measurement: In 2D and 3D space, online metrology provides real-world measurements of objects/features to a specified precision.

- Identification, sorting, and reading: This category includes identifying and sorting objects and features in a scene, reading and understanding characters and code symbols (1D/2D), and sorting and counting objects according to geometry, color, or other characteristics.

Machine vision has a few key differentiators relative to other technologies. First, it's automated and noncontact. It always provides both automated acquisition and analysis. Other technologies, in contrast, involve only acquisition or only analysis.

Computer vision, for example, provides only analysis, while imaging involves only acquisition. Machine vision provides both and is virtually always characterized by a one-to-one image-to-process relationship. It's not typically a streaming technology like the vision used in autonomous driving, where images stream in continuously and are processed in real time.

Machine vision involves automated image acquisition and real-time processing based on a set of rules and parameters. By using digital cameras to acquire properly illuminated images and processing them in real time, machine vision systems output decisions, such as pass or fail, based on the defects they detect.
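As a rough illustration of a rule-and-parameter decision, the following minimal Python sketch classifies a tiny grayscale image as pass or fail. The `InspectionRule` class, its thresholds, and the sample images are all hypothetical values chosen for illustration; a real system would derive them from the application and use a proper imaging library.

```python
from dataclasses import dataclass


@dataclass
class InspectionRule:
    """Hypothetical rule: pixels darker than `dark_threshold` count as defect pixels."""
    dark_threshold: int = 50     # gray level on a 0-255 scale (assumed value)
    max_defect_pixels: int = 3   # defect pixels tolerated before a part fails (assumed value)


def inspect(image, rule):
    """Return 'PASS' or 'FAIL' for a grayscale image given as a list of pixel rows."""
    defect_pixels = sum(1 for row in image for px in row if px < rule.dark_threshold)
    return "FAIL" if defect_pixels > rule.max_defect_pixels else "PASS"


# Two made-up 3x3 images: a uniform bright part and one with dark blemishes.
good_part = [[200, 210, 205], [198, 202, 207], [201, 199, 204]]
bad_part = [[200, 10, 12], [15, 20, 207], [201, 199, 8]]

rule = InspectionRule()
print(inspect(good_part, rule))
print(inspect(bad_part, rule))
```

Keeping the thresholds in a separate rule object mirrors the idea that the decision logic stays fixed while the parameters are tuned per application.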

What's the Difference Between Machine Vision and Computer Vision?

Computer vision (CV) refers to the processing of images, with an emphasis on image analysis. While machine vision always involves the automated capture of images, that's not the case for CV. CV does not necessarily involve capturing an image; it can also interpret data from saved images and produce a result or set of results.

While computer vision emphasizes the ability to fully understand images by analyzing them in their totality, machine vision systems may only process a part of the image. Machine vision is always part of a larger machine system and provides vision capabilities for existing technologies such as those used in support of manufacturing applications in production or quality assurance.

Machine vision always delivers data and/or provides an answer or output to an automated process. Machine vision is not just about capturing images but also involves technologies and methods. Technologies are the hardware components, such as cameras and illumination systems, and methods refer to the software. Machine vision is also an engineering discipline, not a science like computer vision.

What Are the Four Types of Machine Vision Systems?

The operation of a machine vision system can take place in one or more dimensions. Rather than looking at the whole image at once, 1D vision systems analyze each line of the digital signal individually. It's common for these systems to look for differences between the current line and the lines acquired before and after it.

A common application of 1D machine vision is for the detection and classification of defects on materials that are manufactured in a continuous web process, such as paper, metals, plastics, and nonwovens, and on materials such as float glass.
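The line-by-line comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `flag_defect_lines`, the use of the mean gray level as the per-line feature, and the `tolerance` value are hypothetical choices, not a description of any particular web inspection product.

```python
def flag_defect_lines(lines, tolerance=20):
    """Return indices of lines whose mean gray level differs from the average
    of the previous and next line by more than `tolerance` gray levels."""
    means = [sum(line) / len(line) for line in lines]
    flagged = []
    # The first and last lines have only one neighbor, so they are skipped.
    for i in range(1, len(means) - 1):
        neighbor_mean = (means[i - 1] + means[i + 1]) / 2
        if abs(means[i] - neighbor_mean) > tolerance:
            flagged.append(i)
    return flagged


# Made-up scan of a moving web; line 2 contains a dark streak.
web = [
    [120, 121, 119, 120],
    [121, 120, 120, 119],
    [60, 58, 119, 120],
    [120, 119, 121, 120],
    [119, 120, 120, 121],
]

print(flag_defect_lines(web))  # [2]
```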

In contrast, 2D machine vision systems provide area scans that are more appropriate for discrete parts. 2D machine vision systems are the most common and are compatible with all types of software packages. The resolution range of 2D machine vision systems continues to expand. The majority of general-purpose applications require camera sensors with resolutions up to 5 MP, but sensors with 10, 20, or 30 MP and higher are becoming more common.

In contrast to 2D area array machine vision systems that take two-dimensional snapshots of objects, 2D line scan machine vision systems acquire images line-by-line and, as a result, require motion. An analogy to explain the difference between area scan and line scan systems is to compare photocopiers to fax machines. Area array cameras take snapshots of the entire field of view and don't require motion, similar to the way a photocopier needs to see the entire document to copy it. In contrast, a fax machine or document scanner creates an electronic copy of the document by scanning it line by line with motion, just like a line scan camera.

It's common to use line scan systems to inspect cylindrical objects, to satisfy high-resolution and/or high-speed inspection requirements, and to inspect continuously moving objects. In terms of cost, line scan cameras are less expensive, although they generally require more intense illumination and are more complicated to integrate due to the required encoder feedback.
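Because a line scan image is built up through motion, the line rate has to track the speed of the object. The sketch below shows the basic arithmetic, assuming square pixels are wanted (one acquired line per pixel of travel). The function names, the 500 mm/s speed, the 0.1 mm pixel size, and the 40 pulses/mm encoder are hypothetical example values.

```python
def required_line_rate_hz(speed_mm_per_s, pixel_size_mm):
    """Lines per second needed so that each line covers one pixel of travel."""
    if speed_mm_per_s < 0 or pixel_size_mm <= 0:
        raise ValueError("speed must be >= 0 and pixel size must be > 0")
    return speed_mm_per_s / pixel_size_mm


def encoder_pulses_per_line(encoder_pulses_per_mm, pixel_size_mm):
    """Encoder pulses that elapse while the object moves by one pixel."""
    if encoder_pulses_per_mm <= 0 or pixel_size_mm <= 0:
        raise ValueError("encoder resolution and pixel size must be > 0")
    return encoder_pulses_per_mm * pixel_size_mm


# Example: a web moving at 500 mm/s imaged at 0.1 mm per pixel
# needs roughly 5,000 lines per second.
line_rate = required_line_rate_hz(500, 0.1)

# With a 40 pulse/mm encoder, a line would be triggered about every 4 pulses.
pulses = encoder_pulses_per_line(40, 0.1)

print(f"{line_rate:.0f} lines/s, one line every {pulses:.1f} encoder pulses")
```

Triggering from the encoder rather than a fixed clock keeps the pixel aspect ratio constant when the conveyor speed drifts, which is one reason line scan integration takes more effort.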

3D machine vision systems sense and digitally process three-dimensional physical space. 3D machine vision captures three-dimensional data of the objects to provide information about the shape, volume, depth, and orientation of the object. A 3D machine vision system typically uses multiple cameras or at least one laser displacement sensor. Inspection, measurement, and robot guidance are just a few of the applications for which these systems are well suited.

What Is Machine Vision Lighting?

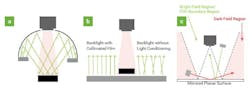

Machine vision lighting is an essential part of the machine vision system. To illuminate a target properly, both the choice of light source and its placement matter. By maximizing contrast or color information, machine vision lighting makes the machine vision system's task of analyzing the object or feature of interest more efficient.

What Are the Four Cornerstones of Machine Vision Lighting?

There are four image contrast enhancement concepts (cornerstones) used for vision illumination:

- Geometry: Spatial geometry is the relationship between an object and a light source.

- Pattern or structure: Light pattern or structure refers to the shape of the light projected onto an object.

- Wavelength or color: Color or wavelength describes how light is differentially reflected and absorbed by an object and its immediate surroundings.

- Filters: Filters block or pass wavelengths and/or directions of light differentially.

These four cornerstones provide four ways to create or enhance the contrast of parts against their backgrounds.

What Is the Right Light for Your Machine Vision Application?

In machine vision, LEDs, fluorescents, and quartz-halogens are by far the most commonly used lighting sources, especially in small to medium-scale inspection stations, whereas metal halide and Xenon are typically deployed in large-scale or bright-field applications. In the past, fluorescent and quartz-halogen lighting sources were most commonly used, but as LED technology has improved in stability, intensity, efficiency, and cost-effectiveness over the past 15 years, it has become the de facto standard for almost all mainstream machine vision applications.

Because LED-based machine vision lighting covers a range of sizes, wavelengths, and illumination characteristics, engineers have a lot of options when determining the best light source for a given project.

What Are the Three Types of LED Controllers for Machine Vision?

LED lighting controllers play an important role in achieving optimal image quality and machine vision system performance. In an era where machine vision is increasingly used to automate, inspect, and control quality, selecting the right LED lighting controller is vital to success.

Current source controllers come in a variety of types, ranging from simple units with limited features and low power output to more powerful models with more features and higher power output. Three controller types can be found on the market today:

- Embedded LED Controllers: An embedded LED controller, located inside the illuminator housing, is also known as an "in-head" controller or an "on-board" controller.

- External or Discrete LED Controllers: External LED controllers, also called discrete controllers, provide greater functionality and customization. They're fully disconnectable and can be mounted on the tabletop or the panel/DIN rail.

- Cable Inline LED Controllers: Inline LED controllers are either fixed in the cable or attached with quick disconnects. They offer smart features in a compact design that adapts to tight spaces, making them a cost-effective compromise.

What Is the Difference Between Continuous, Gated, and Overdrive Strobe Control in Machine Vision Lighting?

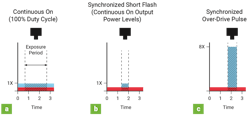

It's possible to reduce pixel blur during high-speed inspections of singulated or indexed parts by driving LEDs to strobe short, high-intensity pulses. Using LEDs continuously is self-explanatory and requires no other controls than those needed to assign a fixed intensity. However, to understand the distinctions, applications, and benefits of strobe versus gated operation, it's important to first define the terms.

An example of gated continuous illumination is strobing in commercial photography, which involves repeatedly switching the power to a continuous light source on and off. Duty cycle refers to the fraction of each on/off period during which the light source is on. While continuous operation has a duty cycle of 100%, a gated light that's switched on for 100 milliseconds over a 1-second period will have a 10% duty cycle.
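The duty cycle arithmetic is simple enough to capture in a short Python helper. The function name `duty_cycle_percent` is a hypothetical one chosen for this sketch; the numbers reproduce the 100-millisecond example above.

```python
def duty_cycle_percent(on_time_ms, period_ms):
    """Percentage of each on/off period during which the light is on."""
    if period_ms <= 0 or not 0 <= on_time_ms <= period_ms:
        raise ValueError("need period > 0 and 0 <= on time <= period")
    return 100.0 * on_time_ms / period_ms


# A gated light on for 100 ms out of every 1,000 ms.
print(duty_cycle_percent(100, 1000))   # 10.0

# Continuous operation: the light is on for the whole period.
print(duty_cycle_percent(1000, 1000))  # 100.0
```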

When applied to machine vision lighting, gated operation helps maintain the thermal equilibrium between light sources' on/off states, which improves efficiency and reduces the detrimental impacts of warm-up on LED performance. Machine vision inspections involving multipoint illumination often use gated illumination to prevent crossfire between back and front-light sources. Gated configurations typically use pulse widths of tens of milliseconds, and because the LED cools down while it is off, they operate more efficiently than continuous illumination.

Unlike gated lighting, overdrive strobe lighting offers some nuanced but important capabilities, particularly when it comes to LED sources. Since LEDs are solid-state semiconductor light sources, they can be controlled more precisely in terms of duration and pulse shape. Most importantly, LEDs can be overdriven to deliver much more intense pulses than can be achieved with either continuous or gated operation.

Strobe overdrive directs more current through an LED illuminator at lower duty cycles. At duty cycles from 1% down to as low as 0.1%, LED pulse lengths in overdrive mode can range from a few milliseconds to 1 microsecond, which allows the LEDs to dissipate heat properly between high-current pulses and prevents damage or degradation. Overdrive pulses can be as short as 1 to 2 microseconds, but the optimal return on overdrive capacity is between 50 and 500 microseconds, assuming a duty cycle of 2% or less.
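The relationship between pulse width, duty cycle, and strobe rate can be worked through with a short Python sketch. The function names are hypothetical, and the window check simply encodes the 50 to 500 microsecond, 2%-or-less figures quoted above; actual limits depend on the specific illuminator and controller.

```python
def min_period_us(pulse_width_us, max_duty_percent):
    """Shortest on/off period that keeps the duty cycle at or below the limit."""
    if pulse_width_us <= 0 or not 0 < max_duty_percent <= 100:
        raise ValueError("need pulse width > 0 and 0 < duty cycle <= 100")
    return pulse_width_us * 100.0 / max_duty_percent


def max_strobe_rate_hz(pulse_width_us, max_duty_percent):
    """Highest strobe rate allowed by the duty cycle limit."""
    return 1_000_000.0 / min_period_us(pulse_width_us, max_duty_percent)


def in_cited_overdrive_window(pulse_width_us, duty_percent):
    """True if the settings fall in the 50-500 us, <= 2% range quoted in the text."""
    return 50 <= pulse_width_us <= 500 and duty_percent <= 2


# A 100 us pulse at a 2% duty cycle needs at least 5,000 us between pulse starts,
# which caps the strobe rate at 200 pulses per second.
print(min_period_us(100, 2))
print(max_strobe_rate_hz(100, 2))
print(in_cited_overdrive_window(100, 2))
```

Working backward from the required inspection rate this way shows quickly whether a given pulse width leaves the LED enough off time.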

For demanding machine vision applications, such microsecond pulse widths minimize pixel blur during high-speed inspections, reduce ambient light effects, and enable many other powerful machine vision illumination techniques in use today.
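To see why shorter pulses reduce blur, it helps to estimate how far a part travels while the light is on. The Python sketch below assumes the strobe pulse sets the effective exposure (ambient light is negligible) and that motion is parallel to the sensor rows. The function name, the 1,000 mm/s part speed, and the 0.1 mm pixel size are hypothetical example values.

```python
def motion_blur_pixels(speed_mm_per_s, pulse_width_us, pixel_size_mm):
    """Estimate blur, in pixels, as the distance traveled during one light pulse."""
    if speed_mm_per_s < 0 or pulse_width_us < 0 or pixel_size_mm <= 0:
        raise ValueError("speed and pulse width must be >= 0, pixel size > 0")
    distance_mm = speed_mm_per_s * pulse_width_us / 1_000_000.0
    return distance_mm / pixel_size_mm


# A part moving at 1,000 mm/s, imaged at 0.1 mm per pixel:
gated_blur = motion_blur_pixels(1000, 10_000, 0.1)   # 10 ms gated pulse
strobe_blur = motion_blur_pixels(1000, 100, 0.1)     # 100 us overdrive pulse

print(f"gated: about {gated_blur:.0f} pixels of blur")
print(f"overdrive strobe: about {strobe_blur:.0f} pixel of blur")
```

In this example, shortening the pulse from 10 milliseconds to 100 microseconds cuts the estimated blur from roughly 100 pixels to roughly 1 pixel, which is why overdrive makes up for the lost exposure time with higher intensity.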

About the Author

John D. Thrailkill

Marketing Manager, Advanced Illumination

John D. Thrailkill brings over a decade of experience at Advanced Illumination to his role as Marketing Manager. His initial start in engineering, working on product designs, was followed by time spent in the applications lab, giving him firsthand insight into both the technical aspects and practical applications of machine vision technology.

Today, he leverages this deep understanding to identify market needs, anticipate industry trends, and develop marketing strategies that effectively communicate the value of machine vision solutions to engineers and technical professionals.